Reading log files into Azure Log Analytics Workspace from external data sources and Storage Accounts

Azure Log Analytics is a great tool. Building queries with Kusto and using them for Alerts, Dashboards and other automation is pretty easy especially with its advanced capabilities. There are several great features which are not very commonly known, one of them being the ability to access logs from external sources and run Kusto queries. In this article I will demonstrate how easy it is to connect to an external source and process logs. We are going to use the “externaldata” operator details for which is available here

Let us imagine you have a log file on a webserver or even on a Storage account. With externaldata operator you can easy fetch the log file and write queries against the log file. Externaldata operator does work for multiple files to make information available as a single data set. In the below example I have used a json file on Blob Storage. The file has the following column headers

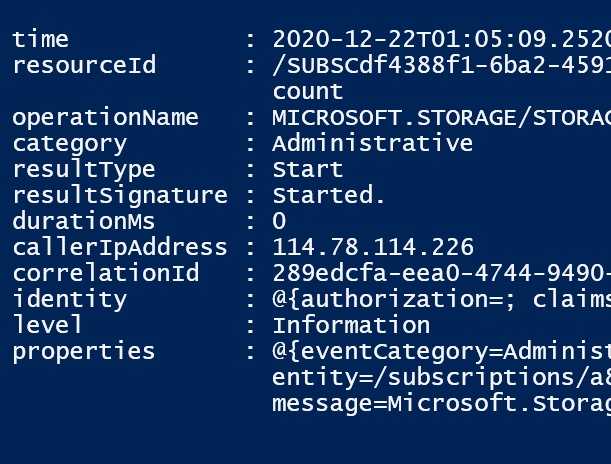

To investigate the headers to build a Kusto query in log analytics I downloaded a sample file from the storage account and ran the following powershell command to convert my JSON file into a PSCustomObject and investigate the first value in the set while displaying the headers/columns we are intersted in.

$json = Get-Content -Path 'd:\test.json' | ConvertFrom-JSON

$json[0]It should show the following output

From the output the column headers appear to be time, resourceID, OperationName, Category, resultType, resultSignature, Duration, CallerIpAddress, CorrelationID, identity, level and properties. We will need these to build a kusto query.

As I mentioned earlier I have my files in a storage account I will need a URL with SAS token to access these files in the “externaldata” operator. In case of a Storage Account in Azure you can easily pick the one from the portal or build one if you know the file location and the SAS token.

The URL I am going to use is

“https://teststorageaccount.blob.core.windows.net/insights-activity-logs/resourceId=/SUBSCRIPTIONS/a8392493-08b9-44b8-9a66-ac2029dfb850/y=2020/m=12/d=22/h=01/m=00/PT1H.json?sp=r&st=2021-01-15T01:37:10Z&se=2021-01-15T09:37:10Z&spr=https&sv=2019-12-12&sr=b&sig=LZopKr7bRw1i2uerO3CT9f3thutW5rPSTQ3ekrzXnTg%3D”

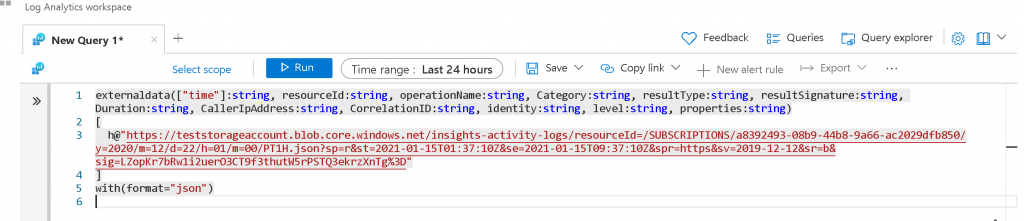

We now have everything we need to build a Kusto Query in Log analytics. The kusto query in log analytics would be

externaldata(["time"]:string, resourceId:string, operationName:string, Category:string, resultType:string, resultSignature:string, Duration:string, CallerIpAddress:string, CorrelationID:string, identity:string, level:string, properties:string)

[

h@"https://teststorageaccount.blob.core.windows.net/insights-activity-logs/resourceId=/SUBSCRIPTIONS/a8392493-08b9-44b8-9a66-ac2029dfb850/y=2020/m=12/d=22/h=01/m=00/PT1H.json?sp=r&st=2021-01-15T01:37:10Z&se=2021-01-15T09:37:10Z&spr=https&sv=2019-12-12&sr=b&sig=LZopKr7bRw1i2uerO3CT9f3thutW5rPSTQ3ekrzXnTg%3D"

]

with(format="json")Screenshot

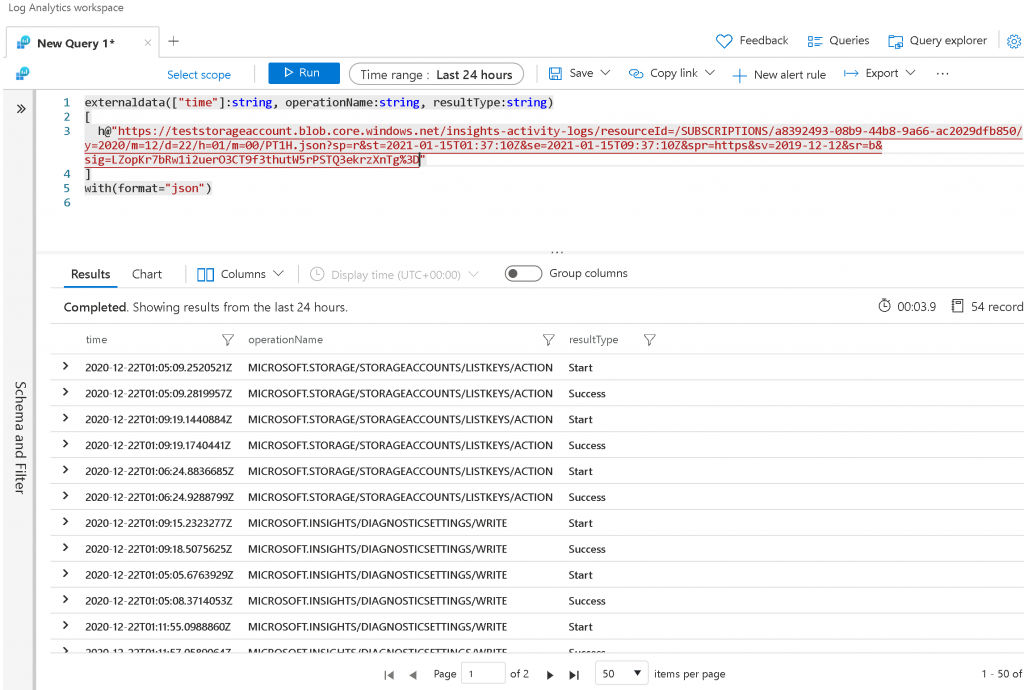

And the results

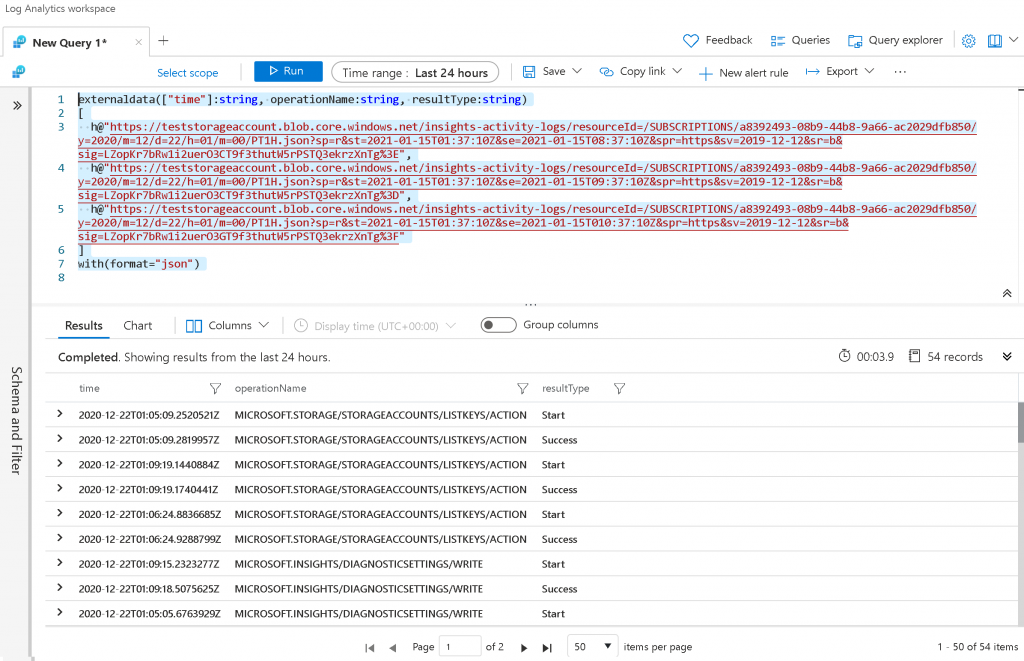

If you want to process multiple files in a single query to get a single set of results the format should be a comma separated list of URL’s as given in the below sample

externaldata(["time"]:string, operationName:string, resultType:string)

[

h@"https://teststorageaccount.blob.core.windows.net/insights-activity-logs/resourceId=/SUBSCRIPTIONS/a8392493-08b9-44b8-9a66-ac2029dfb850/y=2020/m=12/d=22/h=01/m=00/PT1H.json?sp=r&st=2021-01-15T01:37:10Z&se=2021-01-15T08:37:10Z&spr=https&sv=2019-12-12&sr=b&sig=LZopKr7bRw1i2uerO3CT9f3thutW5rPSTQ3ekrzXnTg%3E",

h@"https://teststorageaccount.blob.core.windows.net/insights-activity-logs/resourceId=/SUBSCRIPTIONS/a8392493-08b9-44b8-9a66-ac2029dfb850/y=2020/m=12/d=22/h=01/m=00/PT1H.json?sp=r&st=2021-01-15T01:37:10Z&se=2021-01-15T09:37:10Z&spr=https&sv=2019-12-12&sr=b&sig=LZopKr7bRw1i2uerO3CT9f3thutW5rPSTQ3ekrzXnTg%3D",

h@"https://teststorageaccount.blob.core.windows.net/insights-activity-logs/resourceId=/SUBSCRIPTIONS/a8392493-08b9-44b8-9a66-ac2029dfb850/y=2020/m=12/d=22/h=01/m=00/PT1H.json?sp=r&st=2021-01-15T01:37:10Z&se=2021-01-15T010:37:10Z&spr=https&sv=2019-12-12&sr=b&sig=LZopKr7bRw1i2uerO3GT9f3thutW5rPSTQ3ekrzXnTg%3F"

]

with(format="json")

Screenshot

Once you have the data available to you with the “externaldata” operator, you can filter and process results as usual. There are several possibilities with the externaladata operator. You could pass the query to log analytics through REST API, Powershell or from portal and process the from external sources. The format directive can look at JSON, multijson as well as log file format.

I haven’t checked the cost for processing logs and JSON files from external data sources but I know there would be a minor cost for processing log files stored in a storage cost depending upon the storage tier used. If you find this information or if you have any comments or questions you can reach out to me at gsjutla@lessergeek.com